The “reading wars”---defined loosely as the conflict between whole-language instruction and the science of reading---are making headlines.

This debate is not new. California went through a nearly identical “reading war” in the 1990s. Since NAEP scores were first reported in 1969, we’ve been wringing our hands at American students’ low reading proficiency and lack of progress. Moreover, neuroscientists have been warning for many years that the whole-language approach is less effective than phonics, and may in fact harm some struggling readers.

Thankfully, the science of reading is now gaining in popularity, as both educators and edtech leaders embrace those principles in their curriculum choices and product development, respectively.

But what I want to understand is: why did the whole-language model have so much staying power?

I believe that the lack of high-quality, high-frequency data on reading proficiency allowed whole-language instruction to persist much longer than it should have. Put differently, measuring the impact of teaching on learning requires data (qualitative and quantitative). Without data, you can't evaluate the merits of an instructional approach. We simply did not have enough reading data, outside of psychologists' and neuroscientists' labs, to show that whole-language instruction specifically wasn't working.

A Quick Review of the Basics

Reading is a modern construct, created by humans to share knowledge. Unlike walking and talking, reading is not a natural instinct honed by evolution. In other words, every person has to actively learn to read. (This is the key error in the whole-language theory, which maintains that reading happens more or less naturally.)

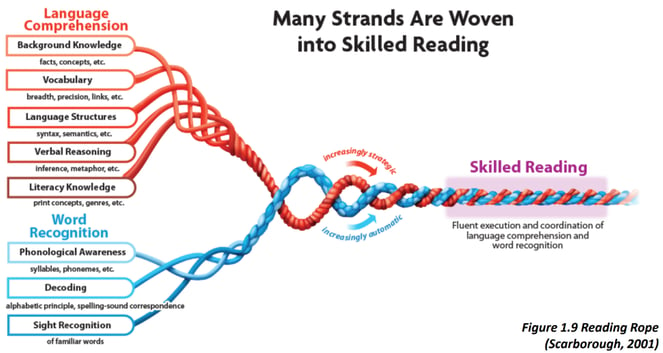

Becoming a good reader requires a mastery of several underlying concepts. Given that reading must be learned, and that this learning process is complex, teachers play a very important role in building literacy proficiency.

Source: https://dyslexiaida.org/event/a-20th-year-celebration-of-scarboroughs-reading-rope/

During their preparation programs, teachers come across two theories of how to teach children to read: the science of reading and whole-language instruction. (Note: a third option, balanced instruction, combines the two). The science of reading emphasizes connecting letters to sounds and sounds to words, and builds on the robust neuroscience evidence on how people learn to read—even those with learning differences like dyslexia. Whole-language instruction focuses on the joy of reading but skips some of the important building blocks like phonics and phonemic awareness.

Why Did Whole-Language Instruction Persist?

Whole-language instruction is under intense scrutiny of late and may finally be on its way out. But it lasted far too long. What gave the whole-language approach such longevity?

Several large-scale issues enabled the persistence of whole-language methods. Schools of education included it in their coursework for new teachers and reinforced the approach. Curriculum companies selling whole-language instruction materials had significant market penetration. Inequities in our education system, underfunding, Covid, and a myriad of other problems further complicated our understanding of why reading scores were low.

The underappreciated factor that I believe increased the staying power of whole-language instruction is the absence of real-time data on student reading progress. This "reading data vacuum" has many causes:

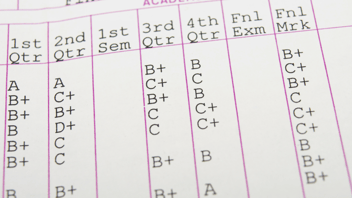

- Children learn the basics of reading in kindergarten through 2nd grade. Ideally, you would measure and closely track kids’ reading progression in those early years to course correct early and often. But this data is hard to come by. For starters, state testing for English Language Arts (ELA) doesn’t start until the end of 3rd grade. Alternative measures of reading success in k-2, such as report cards, are generally less informative and too broad (what does a letter grade really mean for a 6-year-old?). For a lot of children who are reading below grade level, the severity of the gap is unknown until the summer after 3rd grade when their test scores finally arrive.

- State ELA tests measure reading only once, at the end of the academic year. This type of summative data encourages backwards-looking analyses of things that happened last year, rather than active, forward-looking changes for current students.

- Until recently, not only the curriculum but also many reading assessments were tied to the flawed whole-language framework, thereby producing inaccurate measures of reading proficiency. In other words, if you are measuring indicators of whole-language reading growth, you won’t have data on the more meaningful and predictive building blocks of reading development like phonics.

- Results on more frequent (formative, low stakes) reading assessments, administered by teachers, often stay at the classroom level. It is difficult for teachers to share that data with others, and for administrators to then aggregate it to the school or district level to see trends and the big picture.

- Schools’ early warning systems that do leverage high-velocity data focus on absenteeism and graduation, not reading proficiency. For example, schools have multiple processes in place to track daily absenteeism (not least because schools' funding is tied to attendance). Some districts also have predictive graduation "models" for students who are getting too many Ds and Fs to graduate or are short credits. There is no equivalent alarm system for literacy.

In short, detailed information on reading progression, especially in the early grades, has been mostly a black box for the last several decades. Without the right granularity and regularity for reading data, teachers and administrators were unable to see and appreciate the direct effects of whole-language instruction on students. While it was clear that ELA proficiency measured on state tests was low and/or stagnating, it was easy to focus on big causes like poverty, and to overlook the impact of the instructional approach happening in classrooms. The lack of data also allowed teachers to push back against phonics (as they did in California) because, again, proving whole-language instruction was a significant problem was very hard. The consequence of all this cannot be understated: many Americans (adults and children) just don't read well.

What’s Next?

Early reading assessments that are built on science of reading principles offer a promising way forward. Newer digital tools like Literably or Readlee in particular can provide rich and frequent data. Older software like Acadience, iReady, and NWEA Map are also valuable aides in this effort to assess reading skills in elementary (and beyond).

Data, however, is not a panacea; we have to get other things right too. First, we must use the reading data to make different instructional and intervention decisions. Districts can leverage external tools (like Schoolytics) to make data access and analysis easier and faster. Second, we should reinforce a culture of evaluation for improvement, wherein curiosity and inquiry are encouraged, even if that means exposing failure. And lastly, we must use data to test and confirm our hypothesis that the science of reading approaches work as hoped, so that we don’t repeat the mistakes of the past.

Interestingly, I am currently witnessing this exact data and literacy transformation in my own district where I'm a school board member. Thanks to a strong initiative by district leaders and teachers, combined with the adoption of rigorous assessments to benchmark every student, we are transitioning from having no district-wide assessments in k-2 and knowing very little, to having a treasure trove of information across multiple reading dimensions. As teachers put their newfound insights to work in their lesson plans and differentiation strategies, I am eagerly anticipating a rise in reading comprehension and other positive benefits to subjects like science and math as well. I'm optimistic for a future where all students master the literacy skills needed for their lifelong learning journey.

.jpg?width=1160&height=652&name=literacy%20crisis%20hero%20image%20(2).jpg)